Computer Architecture Fundamentals Quiz Practice

Test Hardware Organization and System Design Skills

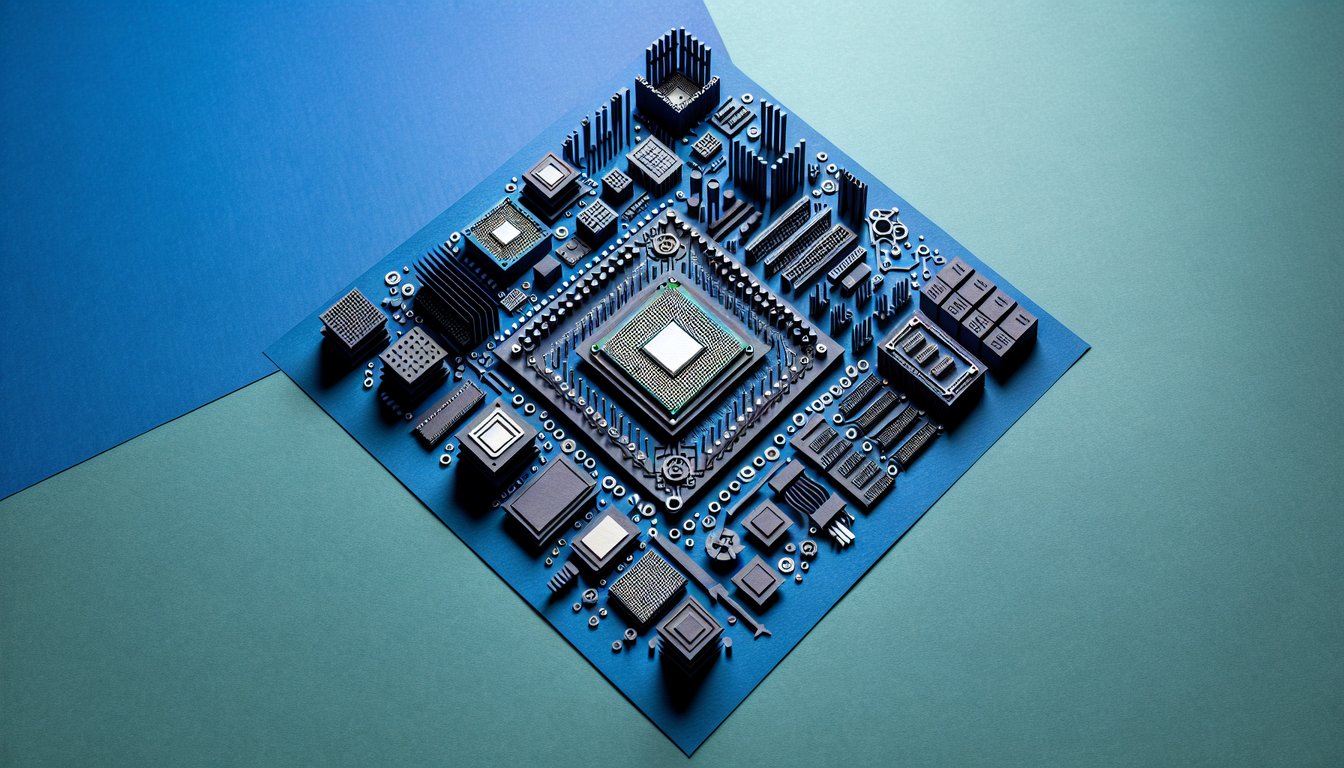

Work through this Computer Architecture quiz to sharpen your understanding of CPU design, memory hierarchy, and system-level performance. It suits students and educators who want a practical challenge, and the questions can be freely modified in the editor to fit your curriculum. Each multiple-choice question draws on concepts from digital logic and core computer architecture fundamentals. Track your progress, deepen your hardware organization skills, and explore more quizzes to reinforce your learning.

Learning Outcomes

- Analyze CPU components and performance trade-offs.

- Identify memory hierarchy roles and cache mechanisms.

- Evaluate instruction set architecture principles.

- Demonstrate understanding of pipelining and hazards.

- Apply bus structures and I/O system concepts.

- Master parallel processing and concurrency fundamentals.

Cheat Sheet

- Understand CPU Components and Performance Trade-offs - Explore the ALU, control unit, and registers that carry out every calculation. You'll learn how design choices like clock speed, core count, and power consumption shape overall efficiency. Balancing these factors is key to designing a CPU that can sustain heavy workloads within its power and thermal limits.

- Explore Memory Hierarchy and Cache Mechanisms - Journey through the layers of memory, from the fastest registers through L1, L2, and L3 caches down to main RAM. Discover how caches cut average access time by keeping recently used data close to the CPU, and how write policies like write-through and write-back decide when modified data reaches main memory. Get ready to optimize data flow and see why the memory hierarchy is the unsung hero of performance.

- Evaluate Instruction Set Architecture (ISA) Principles - Unlock the blueprint of CPU commands by studying how an ISA defines every operation your processor can execute. Compare CISC's rich instruction repertoire with RISC's small, regular instruction set to see how each philosophy influences speed and hardware complexity. This foundational knowledge will sharpen your programming and system-design skills.

- Master Pipelining and Hazard Mitigation - Raise CPU throughput with pipelining, where the stages of successive instructions overlap like a finely choreographed dance. Learn to spot the data, control, and structural hazards that can trip up the pipeline, and explore techniques like forwarding and branch prediction to keep things flowing. By mastering these techniques, you'll reduce stalls and boost overall performance.

- Apply Bus Structures and I/O System Concepts - Explore the highways of data transfer that connect the CPU, memory, and peripherals, and understand how bus widths and protocols influence speed. Dive into I/O systems to see how your computer communicates with keyboards, disks, and networks. This knowledge helps you design balanced systems that avoid bottlenecks.

- Grasp Parallel Processing and Concurrency Fundamentals - Harness multi-core processors and multithreading to tackle big problems faster. Uncover the challenges of concurrency, including race conditions and deadlocks, and learn synchronization techniques like locks and semaphores. These tools will let you write code that's both speedy and safe.

- Analyze Microarchitecture Design and Its Impact - Delve deeper into how an ISA is brought to life through microarchitecture choices that affect throughput, power use, and die size. Examine advanced features like superscalar execution, out-of-order execution, and branch prediction. You'll see how each design choice trades added complexity for performance.

- Understand the Role of Control Units in CPUs - Meet the brain within the brain: the control unit that orchestrates instruction decoding, sequencing, and execution. Learn how it generates control signals, manages pipelines, and ensures data moves to the right place at the right time. A solid grasp of this component is essential for understanding CPU choreography.

- Explore the Importance of Clock Cycles and Timing - Unravel how clock cycles act as the metronome of your CPU, dictating when each instruction stage ticks forward. Study factors like clock rate, cycles per instruction (CPI), and pipeline depth to understand their impact on overall throughput. Timing mastery helps you predict performance and avoid timing-related bugs.

- Study the Evolution of Processor Architectures - Trace the journey from early single-core designs to today's multi-core, heterogeneous processors that combine CPU cores, GPUs, and specialized accelerators. See how shifts in transistor density, power budgets, and workload demands have driven innovation. This historical perspective will spark ideas for future breakthroughs.
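
To make the memory hierarchy item above concrete, here is a minimal Python sketch of the standard average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied to a two-level cache. The function names and the numbers in the example call are illustrative assumptions, not measurements of any real processor.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time (e.g. cycles)."""
    return hit_time + miss_rate * miss_penalty


def two_level_amat(l1_hit, l1_miss_rate, l2_hit, l2_miss_rate, memory_penalty):
    """AMAT for an L1 cache backed by an L2 cache backed by main memory.

    The L1 miss penalty is itself the average time to get data from L2,
    so the formula is applied once per level.
    """
    l1_miss_penalty = amat(l2_hit, l2_miss_rate, memory_penalty)
    return amat(l1_hit, l1_miss_rate, l1_miss_penalty)


if __name__ == "__main__":
    # Hypothetical figures: 1-cycle L1 hit, 5% L1 misses,
    # 10-cycle L2 hit, 20% L2 misses, 100-cycle main memory access.
    print(two_level_amat(1, 0.05, 10, 0.20, 100))
```

Changing the miss rates in the example call shows why even a small improvement in L1 hit rate can matter more than a faster main memory.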
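
The pipelining and clock-cycle items above can be explored with a small Python sketch. It assumes an idealized model in which every pipeline stage takes exactly one cycle and an unpipelined design spends one cycle per stage on each instruction. The function names are hypothetical, and real pipelines deviate from this model.

```python
def cpu_time_seconds(instruction_count, cpi, clock_hz):
    """Classic performance equation: time = instructions * CPI / clock rate."""
    return instruction_count * cpi / clock_hz


def pipelined_cycles(n_instructions, n_stages, stall_cycles=0):
    """Cycles to run n instructions on an ideal n_stages-deep pipeline.

    The first instruction needs n_stages cycles to fill the pipeline; each
    later instruction completes one cycle after the previous one. Hazards
    are modeled only as a lump sum of extra stall cycles.
    """
    return n_stages + (n_instructions - 1) + stall_cycles


def pipeline_speedup(n_instructions, n_stages, stall_cycles=0):
    """Speedup over an unpipelined design that takes n_stages cycles per instruction."""
    unpipelined = n_instructions * n_stages
    return unpipelined / pipelined_cycles(n_instructions, n_stages, stall_cycles)


if __name__ == "__main__":
    # Hypothetical workload: 100 instructions on a 5-stage pipeline.
    print(pipelined_cycles(100, 5))                    # no stalls
    print(pipeline_speedup(100, 5))                    # approaches 5 as n grows
    print(pipeline_speedup(100, 5, stall_cycles=30))   # stalls erode the gain
    print(cpu_time_seconds(1_000_000, 2.0, 1e9))       # 1M instructions, CPI 2, 1 GHz
```

Under these assumptions the speedup approaches the number of stages for long instruction streams, which is why hazards that add stall cycles matter so much.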
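
As an illustration of the concurrency item above, the following Python sketch uses a lock to protect a shared counter that several threads update. The function name `run_counter` and its parameters are made up for this example; it shows one common synchronization pattern, not the only one.

```python
import threading


def run_counter(n_threads=4, increments=10_000):
    """Increment a shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            # "counter += 1" is a read-modify-write sequence. Holding the lock
            # ensures no other thread can interleave between the read and the
            # write, which is what would cause a lost update (a race condition).
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    total = run_counter()
    print(total == 4 * 10_000)
```

With the lock in place, the final count always equals the number of threads times the increments per thread. Removing the `with lock:` line removes that guarantee.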